Photometric redshifts - Introduction

Contents

Photometric redshifts - Introduction#

In this activity, we’re going to use decision trees to determine the redshifts of galaxies from their photometric colours. We’ll use galaxies where accurate spectroscopic redshifts have been calculated as our gold standard. We will learn how to assess the accuracy of the decision trees predictions and have a look at validation of our model.

We will also have a quick look at how this problem might be approached without using machine learning. This will highlight some of the limitations of the classical approach and demonstrate why a machine learning approach is ideal for this type of problem.

This activity is based on the scikit-learn example on Photometric Redshifts of Galaxies.

Magnitudes and colours#

We will be using flux magnitudes from the Sloan Digital Sky Survey (SDSS) catalogue to create colour indices. Flux magnitudes are the total flux (or light) received in five frequency bands (u, g, r, i and z).

Colours correspond to the wavelengths of the light we observe. Longer wavelengths appear red and shorter wavelengths appear blue or violet. Similarly, the astronomical colour (or colour index) is the difference between the magnitudes of two filters, i.e. u - g or i - z. You can read more about the filters and how the cameras work at http://voyages.sdss.org/preflight/light/filters/, https://www.sdss.org/instruments/camera/, and http://voyages.sdss.org/.

This index is one way to characterise the colours of galaxies. For example, if the u - g index is high then the object is brighter in ultra violet frequencies than it is in visible green frequencies.

Colour indices act as an approximation for the spectrum of the object and are useful for classifying stars into different types.

What data do we need?#

To calculate the redshift of a distant galaxy, the most accurate method is to observe the optical emission lines and measure the shift in wavelength. However, this process can be time consuming and is thus infeasible for large samples.

For many galaxies we simply don’t have spectroscopic observations.

Instead, we can calculate the redshift by measuring the flux using a number of different filters and comparing this to models of what we expect galaxies to look like at different redshifts.

In this activity, we will use machine learning to obtain photometric redshifts for a large sample of galaxies. We will use the colour indices (u - g, g - r, r - i and i - z) as our input and a subset of sources with spectroscopic redshifts as the training dataset.

Decision tree algorithms#

Decision trees are a tool that can be used for both classification and regression. In this module we will look at regression, however, in the next module we will see how they can be used as classifiers.

Decision trees map a set of input features to their corresponding output targets. This is done through a series of individual decisions where each decision represents a node (or branching) of the tree.

The following figure shows the decision tree our proverbial robot tennis player Robi used in the lectures to try and decide whether to play tennis on a particular day.

Each node represents a decision that the robot needs to make (or assess) to reach a final decision. In this example, the decision tree will be passed a set of input features (Outlook, Humidity and Wind) and will return an output of whether to play or not.

Decision tree for regression#

In decision trees for real-world tasks, each decision is typically more complex, involving measured values, not just categories.

Instead of the input values for humidity being Normal or High and wind being Strong or Weak we might see a percentage between 0 and 100 for humidity and a wind speed in km/hr for wind.

Our decisions might then be humidity < 40% or wind < 5 km/hr.

The output of regression is a real number.

So, instead of the two outputs of Play and Don't Play we have a probability of whether we will play that day.

The decision at each branch is determined from the training data by the decision tree learning algorithm. Each algorithm employs a different metric (e.g. Gini impurity or information gain) to find the decision that splits the data most effectively.

For now, just need to know that a decision tree is a series of decisions, each made on a single feature of the data. The end point of all the branches is a set of desired target values.

Decision trees in Python#

The inputs to our decision tree are the colour indices from photometric imaging and our output is a photometric redshift. Our training data uses accurate spectroscopic measurements.

The decision tree will look something like the following.

We can see how our calculated colour indices are input as features at the top and through a series of decision nodes a target redshift value is reached and output.

We will be using the Python machine learning library scikit-learn which provides several machine learning algorithms.

The scikit-learn decision tree regression takes a set of input features and the corresponding target values, and constructs a decision tree model that can be applied to new data.

Sloan Digital Sky Survey data#

We have provided the Sloan data in NumPy binary format (.npy) in a file called sdss_galaxy_colors.npy. The Sloan data is stored in a NumPy structured array and looks like this:

u g r i z ... redshift

19.84 19.53 19.47 19.18 19.11 ... 0.54

19.86 18.66 17.84 17.39 17.14 ... 0.16

... ... ... ... ... ... ...

18.00 17.81 17.77 17.73 17.73 ... 0.47

It also include spec_class and redshift_err columns we don’t need in this activity. The data can be loaded using:

import numpy as np

data = np.load('sdss_galaxy_colors.npy')

print(data[0])

The data[0] corresponds to the first row of the table above.

Individual named columns can be accessed like this:

import numpy as np

data = np.load('sdss_galaxy_colors.npy')

print(data['u'])

Each flux magnitude column can be accessed in the data array as data['u'], data['g'] etc.

The redshifts can accessed with data['redshift'].

Exercise: Features and targets#

Write a get_features_targets function that splits the training data into input features and their corresponding targets.

In our case, the inputs are the 4 colour indices and our targets are the corresponding redshifts.

Your function should return a tuple of:

features: a NumPy array of dimensions m ⨉ 4, where m is the number of galaxies;

targets: a 1D NumPy array of length m, containing the redshift for each galaxy.

The data argument will be the structured array described on the previous slide.

The u flux magnitudes and redshifts can be accessed as a column with data['u'] and data['redshift'].

The four features are the colours u - g, g - r, r - i and i - z. To calculate the first column of features, subtract the u and g columns, like this:

import numpy as np

data = np.load('sdss_galaxy_colors.npy')

print(data['u'] - data['g'])

The features for the first 2 galaxies in the example data should be:

[[ 0.31476 0.0571 0.28991 0.07192]

[ 1.2002 0.82026 0.45294 0.24665]]

And the first 2 targets should be:

[ 0.539301 0.1645703]

Hint: set up your features array with zeros

You can set up the features array with zeros and then set each column to the corresponding calculated feature.

features = np.zeros((data.shape[0], 4))

features[:, 0] = data['u'] - data['g']

import numpy as np

def get_features_targets(data):

targets = data['redshift']

features = np.array([data['u'] - data['g'],

data['g'] - data['r'],

data['r'] - data['i'],

data['i'] - data['z']

])

return features.T, targets

# load the data

data = np.load('data/sdss_galaxy_colors.npy')

# call our function

features, targets = get_features_targets(data)

# print the shape of the returned arrays

print(features[:2])

print(targets[:2])

[[0.31476 0.0571 0.28991 0.07192]

[1.2002 0.82026 0.45294 0.24665]]

[0.539301 0.1645703]

Exercise: Decision tree regressor#

We are now going to use our features and targets to train a decision tree and then make a prediction. We are going to use the DecisionTreeRegressor class from the sklearn.tree module.

The decision tree regression learning algorithm is initialised with:

dtr = DecisionTreeRegressor()

We will discuss some optimisations later in the activity, but for now we are just going to use the default values.

To train the model, we use the fit method with the features and targets we created earlier:

dtr.fit(features, targets)

The decision tree is now trained and ready to make a prediction:

predictions = dtr.predict(features)

predictions is an array of predicted values corresponding to each of the input variables in the array.

Your task is to put this together for our photometric redshift data. Use the comments to guide your implementation.

Finally, print the first 4 predictions. It should print this:

[ 0.539301 0.1645703 0.04190006 0.04427702]

import numpy as np

from sklearn.tree import DecisionTreeRegressor

# load the data and generate the features and targets

data = np.load('data/sdss_galaxy_colors.npy')

features, targets = get_features_targets(data)

# initialize model

dtr = DecisionTreeRegressor()

# train the model

dtr.fit(features, targets)

# make predictions using the same features

predictions = dtr.predict(features)

# print out the first 4 predicted redshifts

print(predictions[:4])

[0.539301 0.1645703 0.04190006 0.04427702]

Evaluating our results: accuracy#

So we trained a decision tree! Great…but how do we know if the tree is actually any good at predicting redshifts?

In regression we compare the predictions generated by our model with the actual values to test how well our model is performing. The difference between the predicted values and actual values (sometimes referred to as residuals) can tell us a lot about where our model is performing well and where it is not.

While there are a few different ways to characterise these differences, in this tutorial we will use the median of the differences between our predicted and actual values. This is given by:

Where \(| |\) denotes the absolute value of the difference.

Exercise: Calculating the median difference#

In this problem we will implement the function median_diff. The function should calculate the median residual error of our model, i.e. the median difference between our predicted and actual redshifts.

The median_diff function takes two arguments – the predicted and actual/target values. When we use this function later in the tutorial, these will corresponding to the predicted redshifts from our decision tree and their corresponding measured/target values.

The median of differences should be calculated according to the formula above.

import numpy as np

# write a function that calculates the median of the differences

# between our predicted and actual values

def median_diff(predicted, actual):

return np.nanmedian(np.abs(predicted-actual))

# load testing data

targets = np.load('data/targets.npy') # currently missing

predictions = np.load('data/predictions.npy') # currently missing

# call your function to measure the accuracy of the predictions

diff = median_diff(predictions, targets)

# print the median difference

print("Median difference: {:0.3f}".format(diff))

Evaluating our results: Validation#

We previously used the same data for training and testing our decision trees.

This gives an unrealistic estimate of how accurate the model will be on previously unseen galaxies because the model has been optimised to get the best results on the training data.

The simplest way to solve this problem is to split our data into* training* and testing subsets:

# initialise and train the decision tree

dtr = DecisionTreeRegressor()

dtr.fit(train_features, train_targets)

# get a set of prediction from the test input features

predictions = dtr.predict(test_features)

# compare the accuracy of the pediction againt the actual values

print(calculate_rmsd(predictions, test_targets))

This method of validation is the most basic approach to validation and is called held-out validation. We will use the \(med\_diff\) accuracy measure and hold-out validation in the next problem to assess the accuracy of our decision tree.

Exercise: Validating our model#

In this problem, we will use median_diff from the previous question to validate the decision tree model.

Your task is to complete the validate_model function.

The function should split the features and targets into train and test subsets, like this 50:50 split for features:

split = features.shape[0]//2

train_features = features[:split]

test_features = features[split:]

Your function should then use the training split (train_features and train_targets) to train the model.

Finally, it should measure the accuracy of the model using median_diff on the test_targets and the predicted redshifts from test_features.

The function should take 3 arguments:

model: the decision tree regressor;

features - the features for the data set;

targets - The targets for the data set.

Hint: keep features and targets together!

When splitting the features and targets be careful to ensure that the train_features have the correct train_targets, i.e. train_features[0] corresponds to train_targets[0] etc.

Exploring the output tree#

But what does the decision tree actually look like?

You can see how the decision is made at each node as well as the number of samples which reach that node.

import numpy as np

import pydotplus as pydotplus

from sklearn.tree import DecisionTreeRegressor, export_graphviz

data = np.load('data/sdss_galaxy_colors.npy')

features, targets = get_features_targets(data)

# Initialize model

dtr = DecisionTreeRegressor(max_depth=3) # We will come to this input in the next tutorial

# Split the data into training and testing

split_index = int(0.5 * len(features))

train_features = features[:split_index]

train_targets = targets[:split_index]

dtr.fit(train_features, train_targets)

dot_data = export_graphviz(dtr, out_file=None,feature_names=['u - g', 'g - r', 'r - i', 'i - z'])

graph = pydotplus.graph_from_dot_data(dot_data)

graph.write_jpg("output/decision_tree.jpg")

---------------------------------------------------------------------------

ModuleNotFoundError Traceback (most recent call last)

/Users/arunkannawadi/Desktop/code/data-driven-astronomy/data_driven_astronomy/5.ipynb Cell 23 in <cell line: 2>()

<a href='vscode-notebook-cell:/Users/arunkannawadi/Desktop/code/data-driven-astronomy/data_driven_astronomy/5.ipynb#ch0000027?line=0'>1</a> import numpy as np

----> <a href='vscode-notebook-cell:/Users/arunkannawadi/Desktop/code/data-driven-astronomy/data_driven_astronomy/5.ipynb#ch0000027?line=1'>2</a> import pydotplus as pydotplus

<a href='vscode-notebook-cell:/Users/arunkannawadi/Desktop/code/data-driven-astronomy/data_driven_astronomy/5.ipynb#ch0000027?line=2'>3</a> from sklearn.tree import DecisionTreeRegressor,export_graphviz

<a href='vscode-notebook-cell:/Users/arunkannawadi/Desktop/code/data-driven-astronomy/data_driven_astronomy/5.ipynb#ch0000027?line=4'>5</a> def get_features_targets(data):

ModuleNotFoundError: No module named 'pydotplus'

The median of differences of \(\approx 0.02\). This means that half of our galaxies have a error in the prediction of \(\lt 0.02\), which is pretty good. One of the reason we chose the median of differences as our accuracy measure is that it gives a fair representation of the errors especially when the distribution of errors is skewed. The graph below shows the distribution of residuals (differences) for our model along with the median and interquartile values.

As you can tell the distribution is very skewed. We have zoomed in here, but the tail of the distribution goes all the way out to 6.

The effect of training set size#

The number of galaxies we use to train the model has a big impact on how accurate our predictions will be. This is the same with most machine learning methods: the more data that they are trained with, the more accurate their prediction will be.

Here is how our median difference changes with training set size:

Training galaxies median_diff

50 0.048

500 0.026

5000 0.023

50000 0.022

Understanding how the accuracy of the model changes with sample size is important to understanding the limitations of our model. We are approaching the accuracy limit of the decision tree model (for our redshift problem) with a training sample size of 25,000 galaxies.

The only way we could further improve our model would be to use more features. This might include more colour indices or even the errors associated with the measured flux magnitudes.

Before machine learning#

Before machine learning, we would have tried to solve this problem with regression — by constructing an empirical model to predict how the dependent variable (redshift) varies with one or more independent variables (the colour indices).

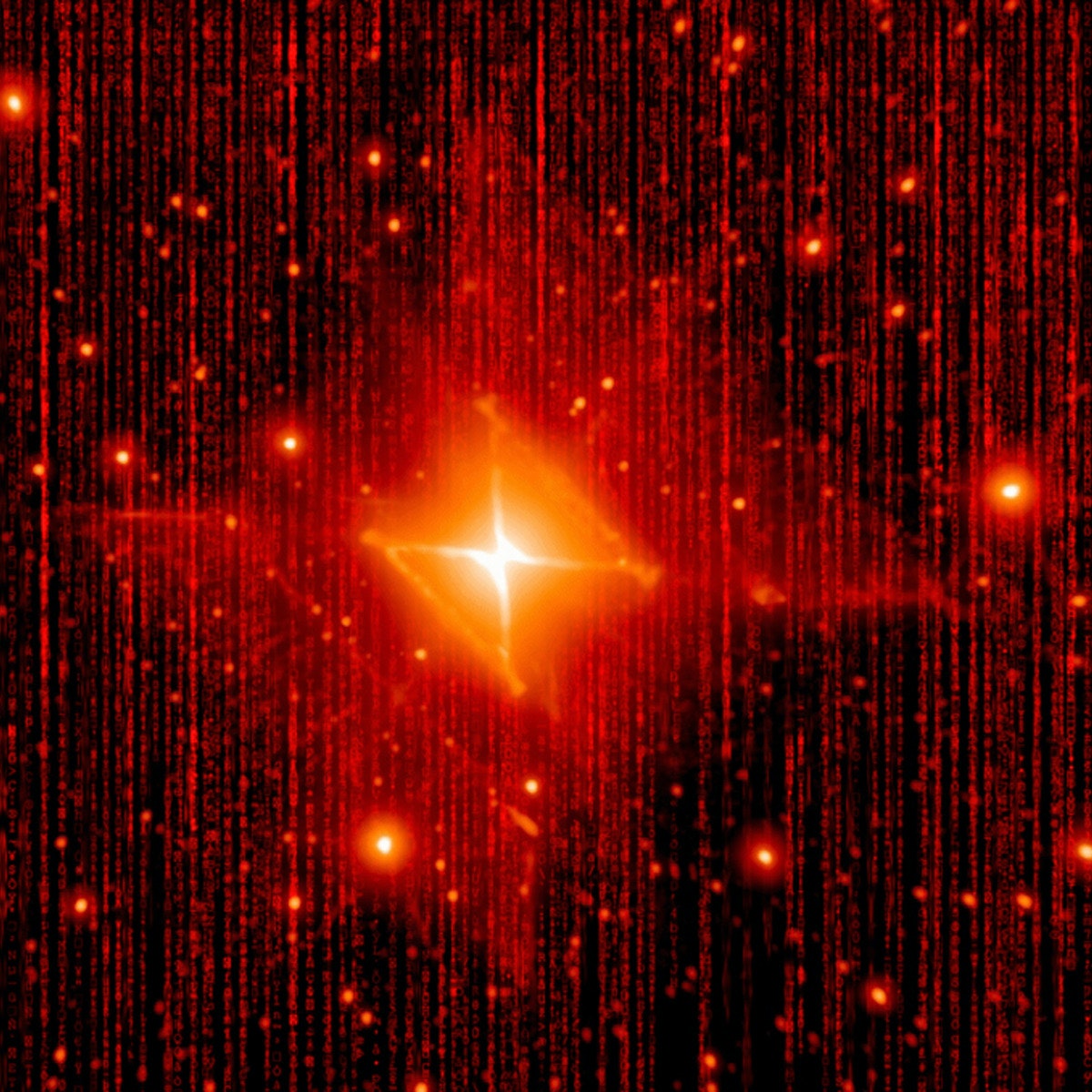

A plot of how the colours change with redshift tells us that it is quite a complex function, for example redshift versus u - g:

One approach would be to construct a multi-variate non-linear regression model. Perhaps using a least squares fitting to try and determine the best fit parameters. The model would be quite complex; based on the above plot, a dampened inverse sine function would be a good starting point for such a model.

While we could try such an approach the function would be overly complex and there is no guarantee that it would yield very promising results. Another approach would be to plot a colour-index vs colour-index plot using an additional colour scale to show redshift. The following plot is an example of such a plot.

It shows that we get reasonably well defined regions where redshifts are similar. If we were to make a contour map of the redshifts in the colour index vs colour index space we would be able to get an estimate of the redshift for new data points based on a combination of their colour indices. This would lead to redshift estimates with significant uncertainties attached to them.

In the next problem you will re-create the Colour-index vs Colour-index plot with redshift as colour bar.

Exercise: Colour Colour Redshift plot#

Your task here is to simply recreate the plot above.

You should use the pyplot module of matplotlib which has already been imported and can be accessed through plt.

In particular you can use the plt.scatter() function, with additional arguments s, c and cmap.

We are interested in the u - g and r - i colour indices.

You can make use of the plt.colorbar() function to show your scatter plots colour argument(c) to a colour bar on the side of the plot.

Make sure you implement x and y labels and give your plot a title.

Gotchas

In order to get your plot working with colours you will need to set the optional parameter lw=number.

There are a lot of small points and unless we set the linewidth to zero the line surrounding each point will block out the point itself.

You should also specify the size of your plot points with the additional call arguments s=number.

A reasonable place to start might be 1, but see what it looks like.

import numpy as np

from matplotlib import pyplot as plt

# Complete the following to make the plot

data = np.load('data/sdss_galaxy_colors.npy')

# Get a colour map

cmap = plt.get_cmap('YlOrRd')

# Define our colour indexes u-g and r-i

x = data['u'] - data['g']

y = data['r'] - data['i']

# Make a redshift array

z = data['redshift']

# Create the plot with plt.scatter and plt.colorbar

number = 0.8

plt.scatter(x,y,c=z,s=number, cmap=cmap, lw=number)

# Define your axis labels and plot title

plt.xlabel('u-g')

plt.ylabel('r-i')

# Set any axis limits

plt.xlim([-0.5, 2.5])

plt.ylim([-0.49, 1.0])

plt.show()

Summary#

In this activity, we implemented some decision tree models to help predict the redshift of galaxies based on their measured colour indices. We learnt that there are several ways to assess the accuracy of the model and in our validations we used the median of the residuals.

We touched on how the problem might be approached without machine learning and found that there isn’t too much available that can be simply used.

We also learnt why we need to cross validate the model and that this is done by splitting the data into seperate training and testing subsets. We will look at k-fold cross validation in the next tutorial; where the testing and training are changed to include various combinations of \(k\) seperate subsets.

In the next tutorial we will also explore how decision trees have a tendency to overfit the data.